Learn the differences between three traffic management and distribution infrastructures: API Gateway vs Load Balancer vs Reverse Proxy.

Web application scaling

Over time, web-based applications and the services they offer have increased in both scale and complexity. Although it was the norm years ago, the idea of keeping a large project running on a single server is becoming less and less realistic.

To handle larger volumes of traffic we have two different forms of scaling: vertical scaling and horizontal scaling.

Vertical scaling

It basically consists of improving the hardware on which our project runs: adding more memory, more CPU, more disk, more network cards. It used to be the cheapest way to scale, but on its own it is rarely a realistic option for large projects today: as demand for the web service grows, there may come a point where a single, more powerful machine is no longer sufficient to meet the performance and availability needs of the project.

Horizontal scaling

It consists of adding more servers or instances in a distributed manner to handle the load. This allows for greater flexibility and ability to adapt to changes in demand, as well as greater fault tolerance, since individual servers can fail without significantly degrading service availability.

Traffic Management and Distribution Infrastructures

It is here, in horizontal scaling strategies, where traffic management and distribution infrastructures play an important role, since thanks to them we can control the data flow and distribute the load efficiently among multiple servers or instances, thus guaranteeing optimum performance and high availability of the service.

Let's take a look at three of the best known: the reverse proxy, the API Gateway and the load balancer.

If you have not had the need to use them, you may have doubts about what each of them does or how they differ, so let's take a look at the characteristics and uses of each of them.

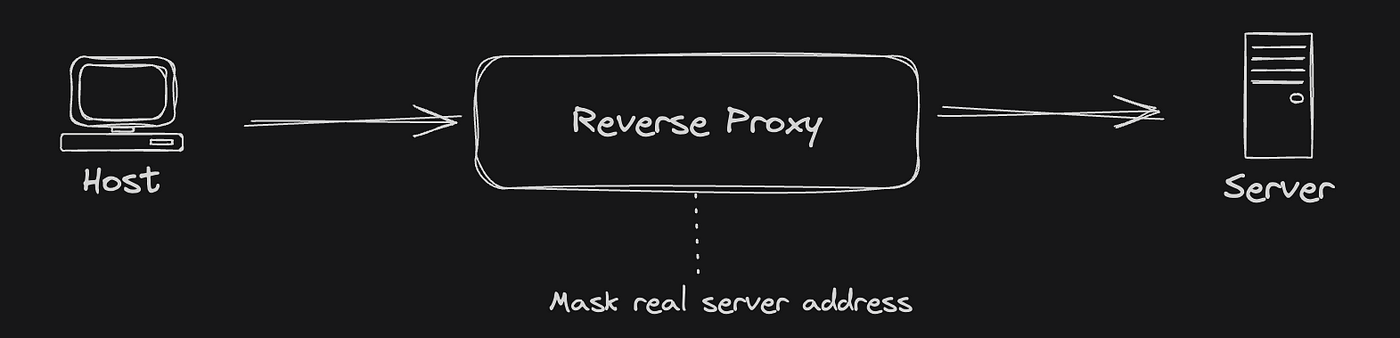

Reverse Proxy

We start with the simplest of the three, the reverse proxy.

The reverse proxy is basically a server that acts as an intermediary between the clients and the target server(s). Instead of communicating directly with the server, the client connects to the reverse proxy, which is responsible for passing the request to the server and returning the corresponding response to the client.

What do we use a reverse proxy for?

- Security. It acts as a barrier between clients and target servers, hiding the internal structure of the network and providing an additional layer of security against malicious attacks.

- Caching of static content. Caches static content such as images, CSS files and JavaScript to reduce the load on the origin servers and improve page load times.

- SSL/TLS termination. It manages SSL/TLS connections from clients and terminates them at the proxy before forwarding requests to destination servers, simplifying SSL/TLS certificate management and improving server performance. This way the HTTPS connection is only between the client and the proxy. This is especially useful when the reverse proxy masks more than one server.

- Load balancing. Yes, that's right, the reverse proxy can be used as a load balancer, as it distributes incoming traffic among several destination servers to improve scalability and service availability.

The reverse proxy function is performed by most popular web servers, such as NGINX, Apache and Microsoft IIS, as well as by dedicated software such as HAProxy.
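To make the idea concrete, here is a minimal sketch of the forwarding and static-content caching described above. It is not how NGINX or HAProxy work internally: the `ReverseProxy` class, the `origin` callable that stands in for the target server, and the list of static suffixes are all assumptions made for illustration.

```python
from typing import Callable, Dict, Tuple

# A response is modelled as (status code, body). This is an illustrative
# simplification, not a real HTTP response object.
Response = Tuple[int, bytes]

# Hypothetical list of file types treated as cacheable static content.
STATIC_SUFFIXES = (".png", ".jpg", ".css", ".js")


class ReverseProxy:
    """Sits between clients and an origin server, caching static files."""

    def __init__(self, origin: Callable[[str], Response]) -> None:
        # `origin` stands in for the real target server (an assumption).
        self._origin = origin
        self._cache: Dict[str, Response] = {}

    def handle(self, path: str) -> Response:
        # Serve from the cache when possible, sparing the origin server.
        if path in self._cache:
            return self._cache[path]
        # Otherwise forward the request to the origin on the client's behalf.
        response = self._origin(path)
        status, _body = response
        # Only successful responses for static content are cached.
        if status == 200 and path.endswith(STATIC_SUFFIXES):
            self._cache[path] = response
        return response
```

With this sketch, a second request for `/logo.png` never reaches the origin, while requests for dynamic paths such as `/api/users` are always forwarded.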

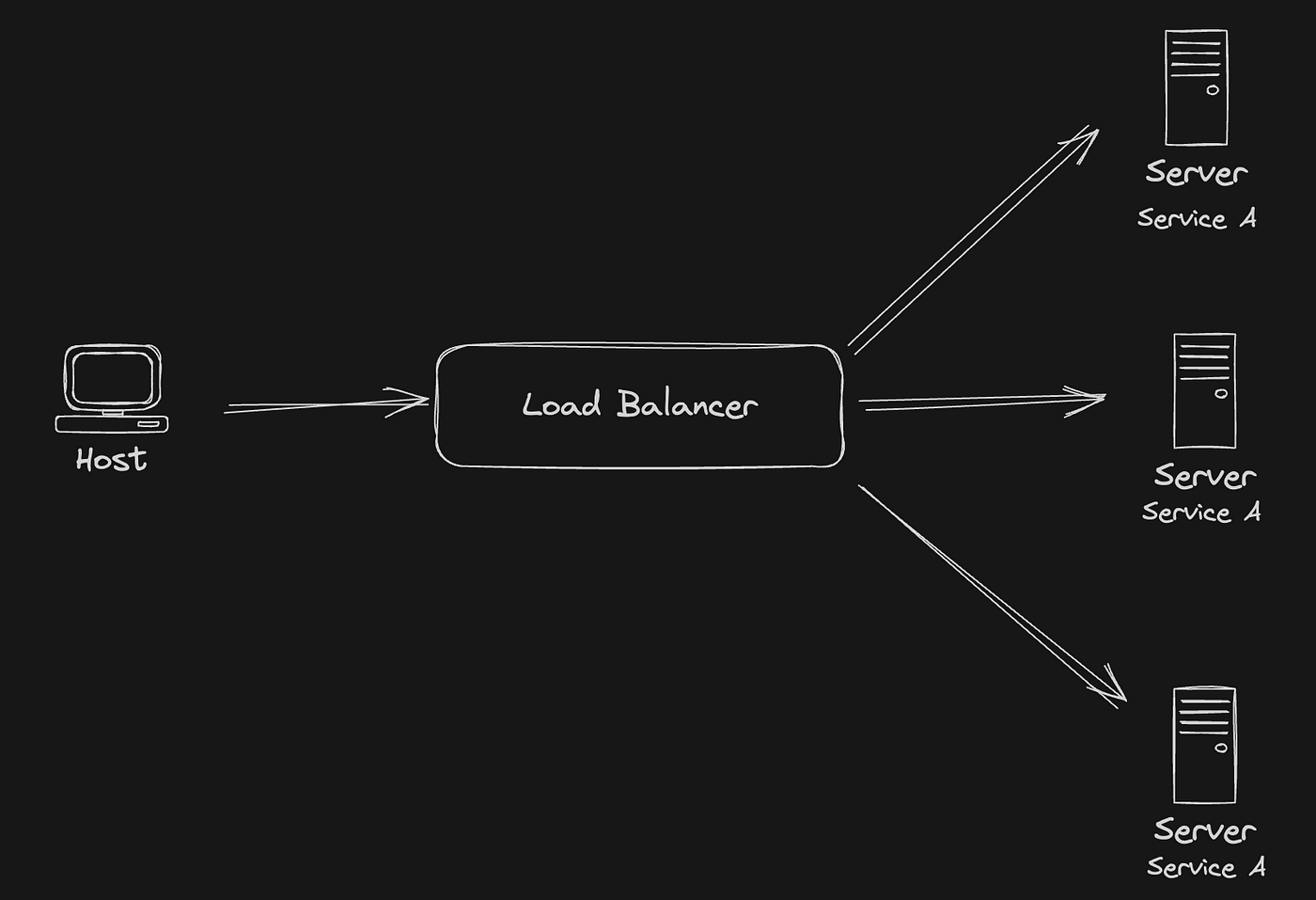

Load Balancer

A load balancer is a device or software that distributes incoming network traffic among multiple servers, with the objective of improving the performance, availability and reliability of a web application or service.

As with the reverse proxy, it acts as an intermediary between the client and the servers; in this case, there is not one server behind the load balancer but several. Because there are several servers, requests are handled in parallel and a much larger volume of requests can be served.

What do we use a load balancer for?

- Load distribution. It distributes network traffic among several servers to avoid overloading a single server and to ensure an equitable use of available resources.

- Improved performance. By distributing the workload among multiple servers, a load balancer can improve the response time and overall performance of an application or service.

- High availability. If a server fails, the load balancer can automatically redirect traffic to alternative servers that are functioning properly, ensuring continuous service availability.

- Scalability. It facilitates the dynamic addition or removal of servers according to load requirements, allowing the infrastructure to scale horizontally in a flexible manner.
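The high availability point can be sketched in a few lines. This is a simplified illustration, not the behaviour of any particular product: the `FailoverBalancer` class and its `mark_down` / `mark_up` methods are hypothetical names, and a real load balancer would typically decide server health through periodic health checks rather than manual calls.

```python
from typing import Iterable, List, Set


class FailoverBalancer:
    """Rotates through servers in order, skipping those marked as down."""

    def __init__(self, servers: Iterable[str]) -> None:
        self._servers: List[str] = list(servers)
        self._down: Set[str] = set()
        self._next = 0  # index of the next server to try

    def mark_down(self, server: str) -> None:
        # In practice a failed health check would trigger this (assumption).
        self._down.add(server)

    def mark_up(self, server: str) -> None:
        self._down.discard(server)

    def pick(self) -> str:
        # Try each server at most once per call, starting where we left off.
        for _ in range(len(self._servers)):
            server = self._servers[self._next]
            self._next = (self._next + 1) % len(self._servers)
            if server not in self._down:
                return server
        raise RuntimeError("no healthy servers available")
```

If one of three servers is marked as down, `pick()` simply alternates between the remaining two until the failed server is marked as up again.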

How does a load balancer distribute the load?

Depending on the implementation, a load balancer can use simple algorithms, such as round-robin (requests are sent to the servers in turn), or more advanced algorithms that take into account the current load or latency of each server, among other factors.

The most common load distribution algorithms include:

- Round Robin. This algorithm distributes requests evenly among the servers in sequence. Each request is sent to the next server in the list, and when the last server is reached, it goes back to the beginning.

- Least Connections. This algorithm directs new requests to the server with the fewest active connections at that time. It is useful to distribute the load more evenly, considering the actual load of each server.

- Least Response Time. Similar to the Least Connections algorithm, this approach directs requests to the server that has the shortest response time at that time. It helps maximize responsiveness and overall system performance.

- IP Hashing. This algorithm uses the client's IP address to determine which server to send the request to. In this way, all requests from a particular client are always sent to the same server, which can be useful for maintaining a user's session on a specific server.

- Weighted Round Robin. This algorithm assigns a weight to each server based on its processing capacity, and then distributes the requests according to these weights. Servers with higher capacity will receive a higher proportion of requests.

- Random. This algorithm randomly chooses a server to handle each incoming request. Although simple, it may not be the most efficient option in terms of equal load distribution.
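Several of the algorithms above fit in a few lines each. The following is a minimal sketch, not the implementation used by any specific load balancer: the function names are hypothetical, the use of SHA-256 for IP hashing is an arbitrary choice, and the weighted variant uses the naive approach of repeating each server according to its weight (real implementations usually interleave the servers more smoothly).

```python
import hashlib
import itertools
from typing import Dict, Iterator, List


def round_robin(servers: List[str]) -> Iterator[str]:
    # Endless iterator: after the last server it starts again from the first.
    return itertools.cycle(servers)


def least_connections(active: Dict[str, int]) -> str:
    # `active` maps each server to its current number of open connections.
    return min(active, key=active.get)


def ip_hash(client_ip: str, servers: List[str]) -> str:
    # A stable hash of the IP always maps the same client to the same server
    # (as long as the server list does not change).
    digest = hashlib.sha256(client_ip.encode()).digest()
    return servers[int.from_bytes(digest[:8], "big") % len(servers)]


def weighted_round_robin(weights: Dict[str, int]) -> Iterator[str]:
    # Naive expansion: a server with weight 2 appears twice per cycle.
    expanded = [server for server, w in weights.items() for _ in range(w)]
    return itertools.cycle(expanded)
```

For example, `weighted_round_robin({"big": 2, "small": 1})` sends two out of every three requests to `big`, and `least_connections({"a": 3, "b": 1, "c": 2})` picks `b`.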

How do we handle sessions with a load balancer?

If the service behind our balancer requires a session, we run the risk that subsequent requests from the same client will be sent to another server that does not have its session saved, which frustrates users by constantly asking them to log in again.

For this purpose, load balancers have a feature known as sticky sessions (also called session affinity), which allows a client's requests to always be directed to the same server for the duration of a specific session; that is, it ensures session persistence by associating a client with a particular server.

When sticky sessions are enabled, the load balancer uses a technique such as hashing the client's IP address or setting a cookie in the client's browser to identify and associate the client with a specific server. As a result, all subsequent requests from that client during the same session are sent to the same server, ensuring a consistent experience for the user and allowing sessions to persist correctly across multiple requests.
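The cookie-based variant of this session persistence can be sketched as follows. This is only an illustration under stated assumptions: the cookie name `lb_server`, the `StickyBalancer` class and the choice to store the server name directly in the cookie are all hypothetical (a production balancer would normally use an opaque or signed value).

```python
import itertools
from typing import Dict, Iterable, Optional, Tuple

# Hypothetical cookie name, chosen only for this example.
COOKIE_NAME = "lb_server"


class StickyBalancer:
    """Pins each client to one server by means of a cookie."""

    def __init__(self, servers: Iterable[str]) -> None:
        self._servers = list(servers)
        self._rotation = itertools.cycle(self._servers)

    def route(self, cookies: Dict[str, str]) -> Tuple[str, Optional[str]]:
        """Return (server, Set-Cookie header value or None)."""
        pinned = cookies.get(COOKIE_NAME)
        # A known server in the cookie means the client is already pinned.
        if pinned in self._servers:
            return pinned, None
        # New client (or its server no longer exists): pick one in rotation
        # and ask the browser to remember it.
        server = next(self._rotation)
        return server, f"{COOKIE_NAME}={server}; Path=/; HttpOnly"
```

A first request without cookies gets a server and a `Set-Cookie` value; every later request carrying that cookie is routed to the same server.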

Load balancer implementations can be found on the main cloud platforms, such as AWS Elastic Load Balancing (ELB), Microsoft Azure Load Balancer and Google Cloud Load Balancing.

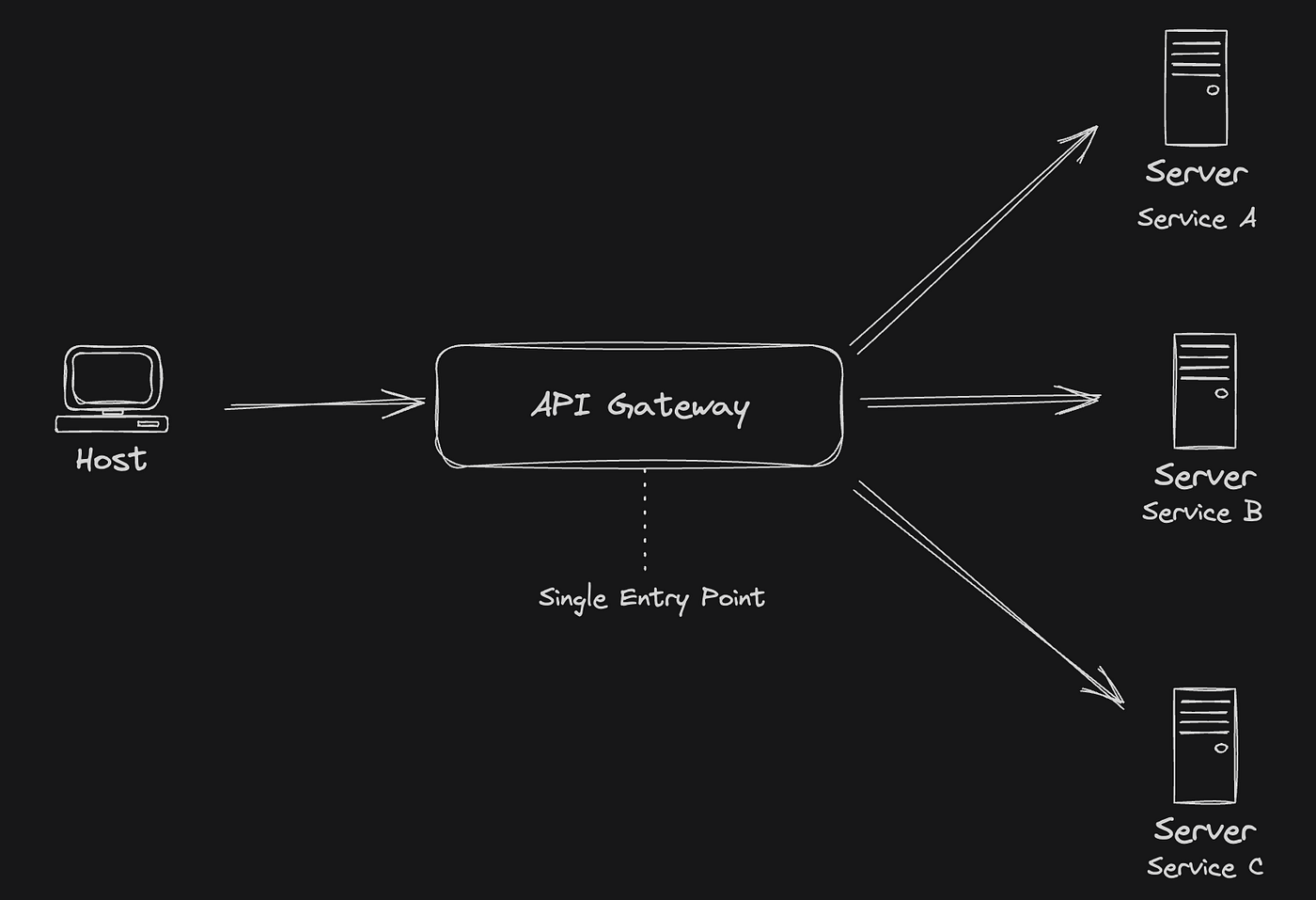

API Gateway

Finally, an API Gateway is a software component that acts as a single point of entry for a variety of services and APIs within a microservices or distributed services architecture. It functions as an intermediate layer between clients and multiple services, providing a unified interface and facilitating the management, security, monitoring and orchestration of requests and responses between them.

How does an API Gateway work?

The operation of an API Gateway is somewhat more complex than the previous cases. It resembles the load balancer, but with a very important nuance: behind an API Gateway there are different services that, in addition, can operate with different protocols. In fact, an API Gateway may be fronting a service that internally uses a load balancer to distribute its load among different instances or servers (we are of course talking about huge amounts of traffic).

Let's take a look at the basic steps of its operation:

- Receiving requests. The API Gateway receives requests from clients and routes them to the corresponding services based on configured routing rules.

- Protocol translation. It can translate communication protocols, for example, by converting HTTP requests into calls to internal services using protocols such as gRPC or Thrift.

- Authentication and authorization. It manages client authentication and authorization, verifying credentials and enforcing access policies before forwarding requests to the underlying services.

- Data aggregation. It can combine multiple calls to internal services to form a single response to the client, thus reducing latency and network traffic.

- Monitoring and analysis. Collects metrics and logs of requests and responses to facilitate monitoring, performance analysis and troubleshooting.
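The steps above can be sketched in a small in-memory model. It is an illustration only, not the API of any real gateway product: the `ApiGateway` class, the token check, the prefix-based routing and the three example services (`users_service`, `orders_service`, `profile_service`) are all assumptions, and protocol translation is left out to keep the example short.

```python
from typing import Callable, Dict, Iterable, List, Tuple

# A backend service is modelled as a function from request path to JSON-like
# data. Real services would be reached over the network (assumption).
Handler = Callable[[str], Dict]


class ApiGateway:
    """Single entry point: authenticates, routes and logs every request."""

    def __init__(self, valid_tokens: Iterable[str]) -> None:
        self._valid_tokens = set(valid_tokens)
        self._routes: List[Tuple[str, Handler]] = []
        self.request_log: List[Tuple[str, int]] = []  # (path, status)

    def add_route(self, prefix: str, handler: Handler) -> None:
        self._routes.append((prefix, handler))

    def handle(self, path: str, token: str) -> Tuple[int, Dict]:
        status, body = self._dispatch(path, token)
        self.request_log.append((path, status))  # monitoring
        return status, body

    def _dispatch(self, path: str, token: str) -> Tuple[int, Dict]:
        # Authentication happens before any backend service is contacted.
        if token not in self._valid_tokens:
            return 401, {"error": "unauthorized"}
        # Routing: the first rule whose prefix matches the path wins.
        for prefix, handler in self._routes:
            if path.startswith(prefix):
                return 200, handler(path)
        return 404, {"error": "no route"}


# Hypothetical internal services used only for this example.
def users_service(path: str) -> Dict:
    return {"user": path.rsplit("/", 1)[-1]}


def orders_service(path: str) -> Dict:
    return {"orders": [101, 102]}


def profile_service(path: str) -> Dict:
    # Data aggregation: two internal calls combined into one response.
    return {**users_service(path), **orders_service(path)}
```

Registering `profile_service` under `/profile/` lets a client obtain user and order data with a single authenticated request, while `request_log` keeps a record of every path and status code for later analysis.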

What do we use an API Gateway for?

- Unification of interfaces. It provides a single interface for clients, simplifying their interaction with a diverse set of internal services.

- Version management. It allows API versions to be introduced and managed, which facilitates the evolution of the underlying services without affecting clients.

- Centralized security. It implements centralized security policies, such as authentication, authorization and encryption, for all internal services.

- Orchestration and transformation. Enables orchestration of requests across multiple internal services and data transformation as needed.

- Scalability and availability. It can distribute the load evenly among multiple internal service instances, thus improving system scalability and availability.

Common problems

Such a complex infrastructure is not free of problems. Let's look at the most common ones:

- Performance bottlenecks. An API Gateway can become a bottleneck if it is not properly sized to handle the load of incoming requests.

- Configuration complexity. Configuring and maintaining routing rules, security policies and data transformations can become complex as the number of services and functionalities deployed increases.

- Single points of failure. If an API Gateway is the single point of entry for all services, a failure in it can affect the availability of the entire system.

- Network overhead. Routing all requests through the API Gateway can introduce additional network overhead, especially in large-scale distributed environments.

Again, we can find API Gateway implementations from the main cloud providers, such as AWS API Gateway, Azure API Management, or Google Cloud API Gateway and Apigee, as well as open source solutions such as Kong.

Conclusions

As we have seen, although similar in purpose, these three traffic management and distribution infrastructures have very different uses and work in very different ways.

It is important to know how to choose which one we need in each case, since choosing the wrong one can result in adding extra complexity to our project, as well as costing us dearly (financially speaking).

Remember: always start with the minimum necessary. There will always be time to scale up!